To nest, or not to nest, that is the question!

Recently, a friend of mine and I were both rebuilding our lab environments. He is using a dedicated server with 64GB RAM and a solid CPU, with Proxmox installed as the OS/hypervisor. My approach, as it seems it always has been, is to run Windows 11 with VMware Workstation and host the hypervisors as VMs, which effectively nests them.

It sparked thoughts about the advantages and disadvantages of either approach. I remember trying to run VXLAN nested and hitting some issues, which I eventually sorted out. While we were discussing it, I actually thought, "Why bother doing it this way? Is it more complex? Does performance suffer?" My intent with this article is to illustrate what I like about my approach. I don't consider it the right or best way; it's the right and best way for me. Maybe someone with similar requirements will find it useful. Again, I am not talking about enterprise production; this is for lab use.

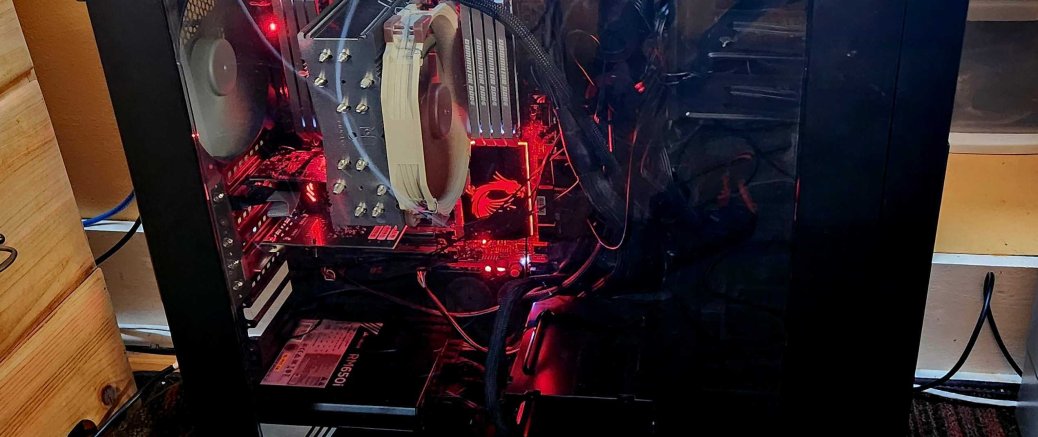

My "server" is my old gaming rig, repurposed. It's all SSD, with 128GB of DDR4 and an i7-5820K processor. I installed a dual-port Intel NIC and a low-power video card.

What I think is cool:

Templating and cloning of actual hosts:

Spin up more hosts as needed, and test automations against them. I understand that you can nest Proxmox inside Proxmox, ESXi, etc., but that fake "bare metal" layer just feels more like a datacenter to me when configuring things like clusters and shared storage.
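
If you want to script the cloning instead of clicking through it, here is a minimal sketch of how that could look. It assumes the `vmrun` utility that ships with VMware Workstation is on the PATH; the template path, lab folder, and host names are made up for illustration. It only builds the commands, so you can review them before running anything.

```python
from pathlib import Path

VMRUN = "vmrun"  # assumption: vmrun (bundled with VMware Workstation) is on PATH


def clone_commands(template_vmx, lab_root, names):
    """Build one `vmrun clone` command per new host. Nothing is executed here."""
    commands = []
    for name in names:
        # Each clone gets its own folder: <lab_root>/<name>/<name>.vmx
        dest_vmx = Path(lab_root) / name / f"{name}.vmx"
        commands.append([
            VMRUN, "-T", "ws", "clone",
            str(template_vmx), str(dest_vmx), "full",
            f"-cloneName={name}",
        ])
    return commands


if __name__ == "__main__":
    # Hypothetical paths and host names, for illustration only.
    for cmd in clone_commands("templates/pve-template/pve-template.vmx",
                              "lab", ["pve01", "pve02", "pve03"]):
        print(" ".join(cmd))
```

Each command is a plain list, so it can be handed to `subprocess.run(cmd, check=True)` once the printed output looks right.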

Snapshotting of the actual "physical" hosts:

Any changes to the hosts can be reverted easily. It's not a replacement for breaking something and learning how to fix it, but sometimes you just don't have time. The great part here is you can just keep trying to fix it over and over, even if you get in the weeds.

All disks/datastores are just thin VMDKs:

I don't necessarily call this a clear advantage, but it opens up interesting backup and restore options. I tend to just sync these VMDKs nightly (actually, all of the host VMs' disks). Restores have never been cleaner. Yes, it's a blanket restore if it's the datastores, but if you need them, they are there. You could also use snapshots and script their creation and deletion so they don't grow. Yes, it's another layer, but in this case I look at it as flexibility. I still implement best-practice DR as if I were in the DC, but I have more options.
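
For the scripted snapshot idea, a rough sketch of the rotation logic might look like the following. The `nightly-` naming scheme, the retention count, and the `.vmx` path are my own assumptions for illustration, not something Workstation dictates. The script takes a new snapshot and deletes scripted ones beyond the newest few, leaving manually named snapshots alone. Like any sketch, treat it as a starting point rather than a finished tool.

```python
from datetime import datetime

PREFIX = "nightly-"     # hypothetical naming convention for scripted snapshots
STAMP = "%Y%m%d-%H%M"   # timestamp format appended to the prefix


def snapshot_name(now):
    """Name for a new scripted snapshot, e.g. nightly-20240104-0200."""
    return PREFIX + now.strftime(STAMP)


def snapshots_to_delete(existing, keep=3):
    """Return scripted snapshots beyond the newest `keep`, oldest first."""
    dated = []
    for name in existing:
        if not name.startswith(PREFIX):
            continue  # manual snapshots are never touched
        try:
            taken = datetime.strptime(name[len(PREFIX):], STAMP)
        except ValueError:
            continue  # has the prefix but not our timestamp format
        dated.append((taken, name))
    dated.sort()
    cutoff = max(len(dated) - keep, 0)
    return [name for _, name in dated[:cutoff]]


def rotation_commands(vmx, existing, now, keep=3):
    """Build vmrun commands: take one new snapshot, then prune old ones."""
    new = snapshot_name(now)
    commands = [["vmrun", "-T", "ws", "snapshot", vmx, new]]
    for old in snapshots_to_delete(list(existing) + [new], keep):
        commands.append(["vmrun", "-T", "ws", "deleteSnapshot", vmx, old])
    return commands


if __name__ == "__main__":
    # Hypothetical VM path and snapshot list, for illustration only.
    current = ["before-upgrade", "nightly-20240101-0200",
               "nightly-20240102-0200", "nightly-20240103-0200"]
    for cmd in rotation_commands("lab/pve01/pve01.vmx", current,
                                 datetime(2024, 1, 4, 2, 0)):
        print(" ".join(cmd))
```

In a real run, the list of existing names would come from `vmrun listSnapshots` for that VM, and the commands would be executed from a nightly scheduled task.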

Experimenting with different storage types on hosts is easy (LVM-Thin pools, ZFS):

Because adding a disk is basically right-click and add, then rescan the bus, I can provision and prototype different types of storage pools rapidly to POC different objectives. You can pull this off by nesting your storage in your management hosts, but things start to get sticky when you actually want to use it dependably.

The hardware interaction is more "generic":

As the hosts are VMs, there's less chance of running into hardware- or firmware-related issues.

I have a pseudo bare metal "layer":

I kind of like having the pseudo bare metal layer from an architecture perspective; it just gives me more options.

That's about it! I don't believe anything I mentioned provides a clear advantage over bare metal. The questions I usually ask myself are: where do you want to spend your time, and what's the objective? The plan for the new lab is to get services running and start implementing some automation. If there are any reasons to migrate those services to bare metal hosts, I'll proceed accordingly. When implementing things, I prefer to get my hands dirty, and I appreciate the availability of multiple layers of snapshots. From a lab perspective, it allows me to prototype the things I am working on more rapidly, and the amount of RAM and SSD the server has allows for this flexibility.